I've recently engaged in a project for my Spatial Data Services, Sources, Standards and Infrastructures course, taught by Prof. Michael Gould. The goal of the endeavor was to create an open data portal using Esri ArcGIS Hub. My group is focused on developing a hub centered on air pollution data (particularly PM2.5 and NO2) in Madrid. During a group meeting, while exchanging ideas, we considered incorporating Overture Maps Foundation data into the hub, especially since we had recently covered Overture during our lectures, more so, the data will be useful for future studies and applications. I volunteered to take on the task, as I am interested in working on such projects.

Essentially, I was tasked with retrieving the Overture data for Madrid and publishing it as a service on our hub. In this article, I will show you how to do the first part in ArcGIS Pro.

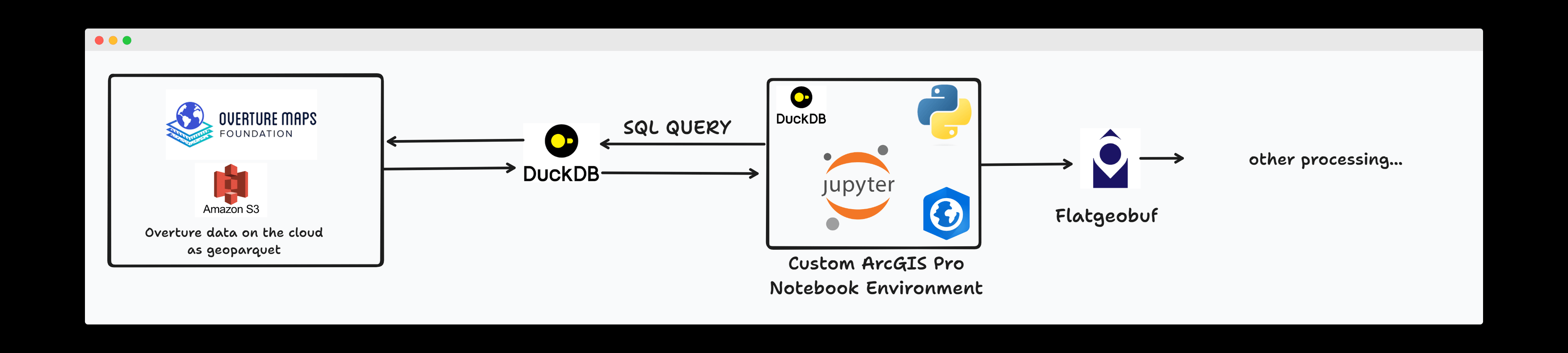

The Architecture

The architecture above covers part of what we intend to achieve. We need to get the data from Overture, extract useful information in ArcGIS Pro and publish as feature layers on ArcGIS Online and eventually the hub.

Pre-requisites

ArcGIS Pro Desktop Software - The ArcGIS Pro Software version used was 3.1.3. (If you don't have access to ArcGIS Pro, I think you should be able to use any jupyter notebook/python environment).

Step 1 - Installing Duck db in ArcGIS Pro

ArcGIS Pro is bundled with it's own package management system named 'conda' and a default python environment (arcgispro-py3), that you can't modify. Conda provides access to a large collection of popular python packages such pandas, geopandas, pyshp etc and makes it easy to install a new package in ArcGIS Pro. However the package you need may not be available in the package repository. For instance, searching for 'duckdb' yields no results, as shown in the GIF below.

To solve this, you need to clone the default environment. With the cloned environment, you will be able to install new packages. This is tantamount to creating a new virtual environment in python.

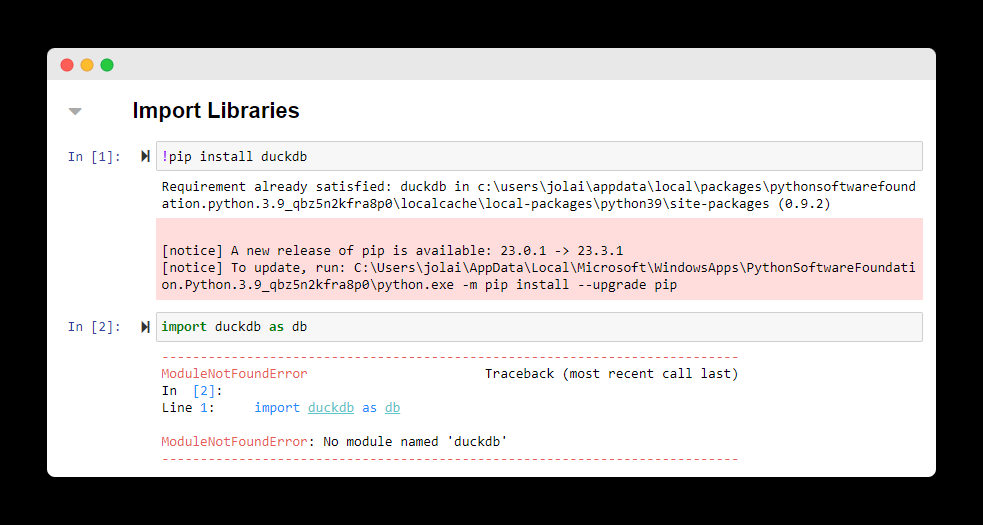

Note: You can install a python package in the default environment, however you won't be able to import and use it. See below:

In my case, the requirement was already satisfied because I have it installed. But in yours, it will install but you won't be able to use it. Moving on. 🏃

1.1 Launch ArcGIS Pro as an Administrator

Before cloning the default environment, make sure to launch ArcGISPro as an administrator, otherwise you will experience some permission errors (tested on Windows) when cloning the environment.

1.2 Cloning the ArcGIS Notebook Environment

To clone the environment, you need to open the Package Manager. If you're on the homepage, you can access the Package Manager from the Settings tab in the side panel. If you're within a project already, you can access the Package manager by clicking on the Options Menu -> Package Manager.

The next step is to clone the environment. Follow the steps in the GIF below to clone the default environment in the Package Manager.

This process will take a few minutes. At this point you can grab a coffee and check back. When the process is completed, your new environment will be listed in the Environment Manager.

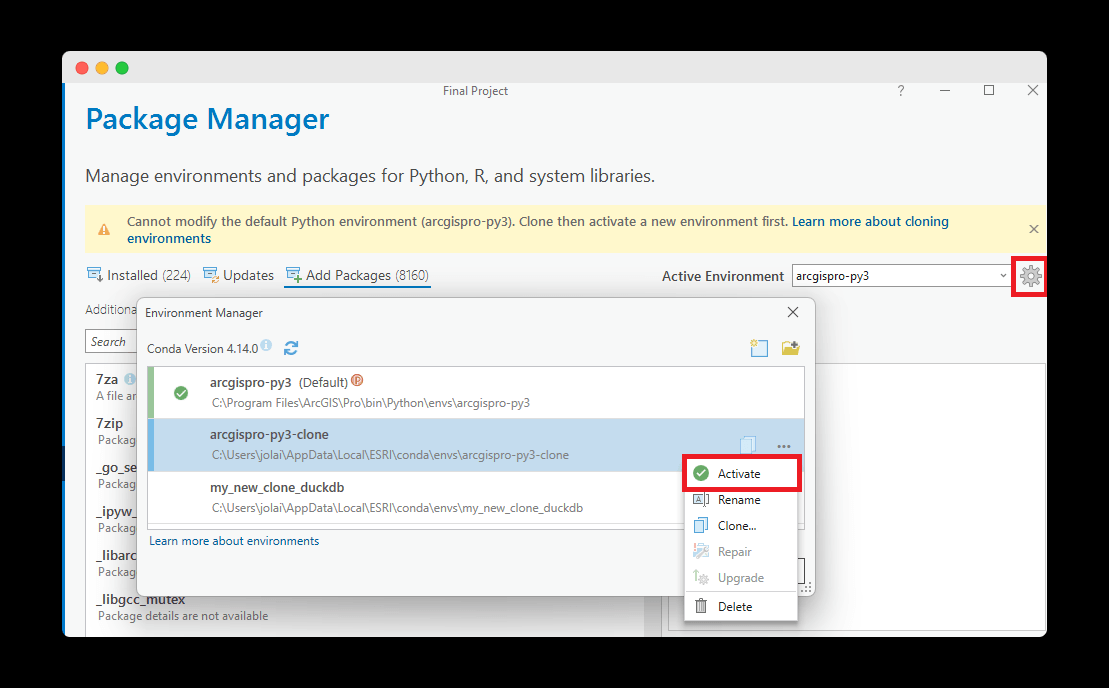

1.3 Activate the Cloned Environment

Click on the ⚙️ icon , it will open the Environment Manager dialog. Select the environment you just created and click activate. Restart your ArcGIS Pro to effect the changes.

1.4 Install Duck Db

To install duckdb, navigate to the Start Menu -> All apps -> Expand the ArcGIS folder -> Open the Python Command Prompt and run the command below. Also see the GIF below for reference.

# install duckdb - or any other python packages

pip install duckdbImport duckdb in the Notebook and confirm there are no errors.

Step 2 - Query Overture Data with Duck DB

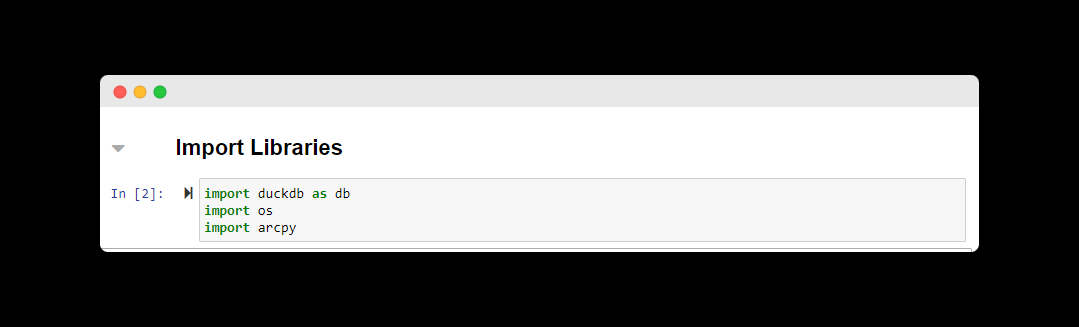

2.1 Import DuckDB and Arcpy

import duckdb as db

import os

import arcpy2.2 Get the Study Area Extent with Arcpy

# replace this with the path to your working directory

working_directory='...'

data_dir = working_directory + '\\data'

madrid_boundary = "Madrid Boundary"

# switch to the data directory

os.chdir(working_directory)

# get the current project

aprx = arcpy.mp.ArcGISProject("CURRENT")

# get the Map of interest

map = aprx.listMaps('Map')[0] # 'Map' is the name of the Map

# get the madrid boundary layer in the Map

madrid_feature_class = map.listLayers(madrid_boundary)[0]

# some validation to confirm it's the right layer

assert madrid_boundary == madrid_feature_class.name

# extract the bounding extent

extent = arcpy.Describe(madrid_feature_class).extent

# store the bounding box of the study area in a dictionary

MADRID_BBOX={

"minx":extent.XMin,

"maxx":extent.XMax,

"miny":extent.YMin,

"maxy":extent.YMax

}2.3 Define some Utility Functions and Constants

# the current release version

OVERTURE_RELEASE_VERSION ="2023-11-14-alpha.0"

def get_theme_address(theme):

"""

Utility function to construct the s3 bucket location for the provided overture data theme.

"""

return f's3://overturemaps-us-west-2/release/{OVERTURE_RELEASE_VERSION}/theme={theme}/type=*/*'2.4 Setup DuckDB

# Initial setup

con = db.connect()

# To perform spatial operations, the spatial extension is required.

# src - https://duckdb.org/docs/api/python/overview.html#loading-and-installing-extensions

con.install_extension("spatial")

con.load_extension("spatial")

# To load a Parquet file from S3, the httpfs extension is required.

# src - https://duckdb.org/docs/guides/import/s3_import.html

con.install_extension("httpfs")

con.load_extension("httpfs")

# Tell DuckDB which S3 region to find Overture's data bucket in

# src - https://github.com/OvertureMaps/data/blob/main/README.md#how-to-access-overture-maps-data

con.sql("SET s3_region='us-west-2'")2.5 DuckDB Query to Download the Buildings Data

buildings_file_name = 'buildings.fgb'

con.sql(f"""

COPY (

SELECT

type,

version,

CAST(updatetime as varchar) as updateTime,

height,

numfloors as numFloors,

level,

class,

JSON(names) as names,

JSON(sources) as sources,

ST_GeomFromWKB(geometry) as geometry

FROM

read_parquet('{get_theme_address('buildings')}', hive_partitioning=1)

WHERE

bbox.minx > {MADRID_BBOX["minx"]}

AND bbox.maxx < {MADRID_BBOX["maxx"]}

AND bbox.miny > {MADRID_BBOX["miny"]}

AND bbox.maxy < {MADRID_BBOX["maxy"]}

) TO '{buildings_file_name}'

WITH (FORMAT GDAL, DRIVER 'FlatGeoBuf');

""")2.6 DuckDB Query to Download the Places Data

places_file_name = 'places.fgb'

con.sql(f"""

COPY (

SELECT

id,

updatetime,

version,

CAST(names AS JSON) AS names,

CAST(categories AS JSON) AS categories,

confidence,

CAST(websites AS JSON) AS websites,

CAST(socials AS JSON) AS socials,

CAST(emails AS JSON) AS emails,

CAST(phones AS JSON) AS phones,

CAST(brand AS JSON) AS brand,

CAST(addresses AS JSON) AS addresses,

CAST(sources AS JSON) AS sources,

ST_GeomFromWKB(geometry)

FROM

read_parquet('{get_theme_address('places')}', hive_partitioning=1)

WHERE

bbox.minx > {MADRID_BBOX["minx"]}

AND bbox.maxx < {MADRID_BBOX["maxx"]}

AND bbox.miny > {MADRID_BBOX["miny"]}

AND bbox.maxy < {MADRID_BBOX["maxy"]}

) TO '{places_file_name}'

WITH (FORMAT GDAL, DRIVER 'FlatGeoBuf');

""")2.7 DuckDB Query to Download the Transportation Data

con.sql(f"""

COPY (

SELECT

theme,

type,

subType,

updateTime,

version,

CAST(road AS JSON) AS road,

CAST(connectors AS JSON) AS connectors,

ST_GeomFromWKB(geometry)

FROM

read_parquet('{get_theme_address('transportation')}', hive_partitioning=1)

WHERE

bbox.minx > {MADRID_BBOX["minx"]}

AND bbox.maxx < {MADRID_BBOX["maxx"]}

AND bbox.miny > {MADRID_BBOX["miny"]}

AND bbox.maxy < {MADRID_BBOX["maxy"]}

)

TO '{roads_filename}'

WITH (FORMAT GDAL, DRIVER 'FlatGeoBuf')

""")2.8 DuckDB Query to Download the Base Data

#base layers are landuse, waterbodies etc

base_layers_filename = 'landuse.fgb'

con.sql(f"""

COPY (

SELECT

theme,

type,

subType,

class,

updateTime,

version,

CAST(names AS JSON) AS names,

CAST(sourceTags AS JSON) AS sourceTags,

CAST(sources AS JSON) AS sources,

ST_GeomFromWKB(geometry)

FROM

read_parquet('{get_theme_address('base')}', hive_partitioning=1)

WHERE

bbox.minx > {MADRID_BBOX["minx"]}

AND bbox.maxx < {MADRID_BBOX["maxx"]}

AND bbox.miny > {MADRID_BBOX["miny"]}

AND bbox.maxy < {MADRID_BBOX["maxy"]}

) TO '{base_layers_filename}'

WITH (FORMAT GDAL, DRIVER 'FlatGeoBuf');

""")2.9 Clean up - Close the Duck DB connection

# explicitly close the connection

con.close()2.10 Check your Data

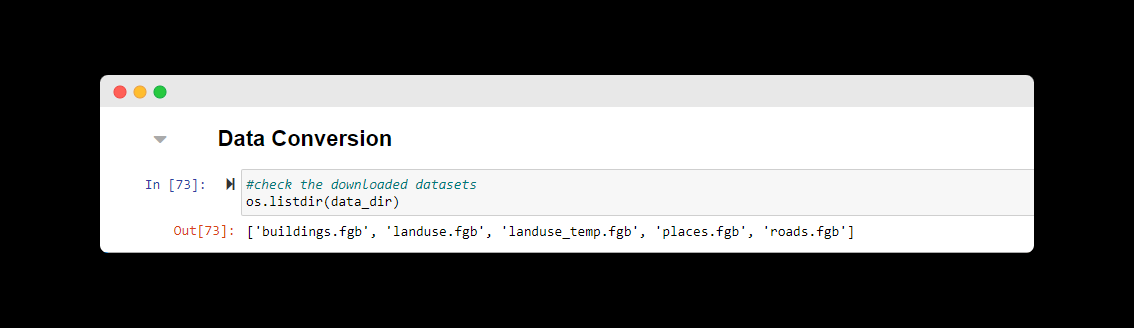

#check the downloaded datasets

os.listdir(data_dir)Note: Get rid of temporary files. Duck DB creates a temporary file and for some reasons, it does not delete it when the download is completed. As you can see above, the

'landuse_temp.fgb'is the temporary file for the actual 'landuse.fgb' data.Downloading may take a while. This depends on your system configuration, network speed, and how large your study area is.

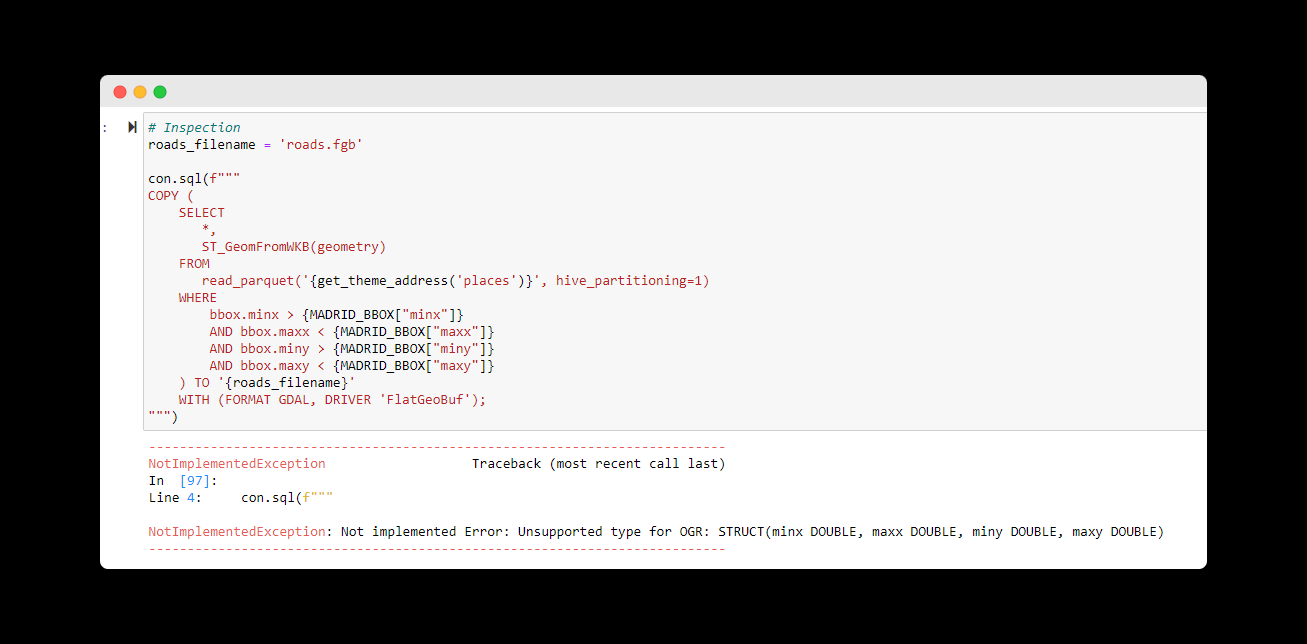

Gotchas

Undoubtedly, I ran into multiple errors when writing the queries. One of them is shown below:

This was because I was trying to fetch all the columns using the SELECT * query, so I approached it like this:

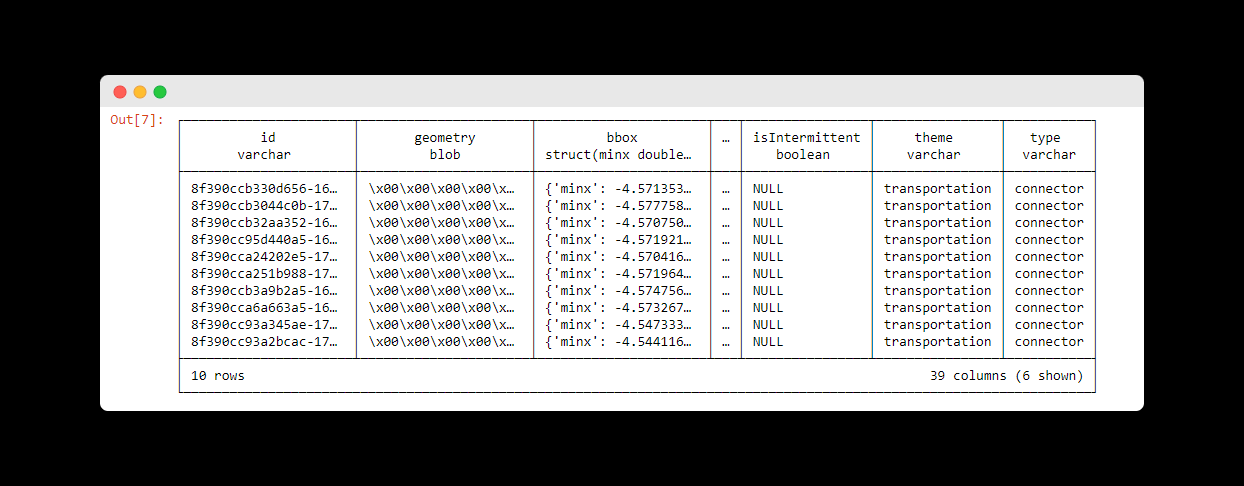

Preview the data from Overture using duckdb and LIMIT to only 10 results.

con.sql(f"""

SELECT

*

FROM

read_parquet('{get_theme_address('transportation')}', hive_partitioning=1)

WHERE

bbox.minx > {MADRID_BBOX["minx"]}

AND bbox.maxx < {MADRID_BBOX["maxx"]}

AND bbox.miny > {MADRID_BBOX["miny"]}

AND bbox.maxy < {MADRID_BBOX["maxy"]}

LIMIT 10

""")If you run the duckdb query above, you will get the response above. As you can see the data type for the bbox column is a struct. So the solution I employed was to select only the columns I need, and to CAST the struct fields to JSON, as you might have noticed in the queries above.

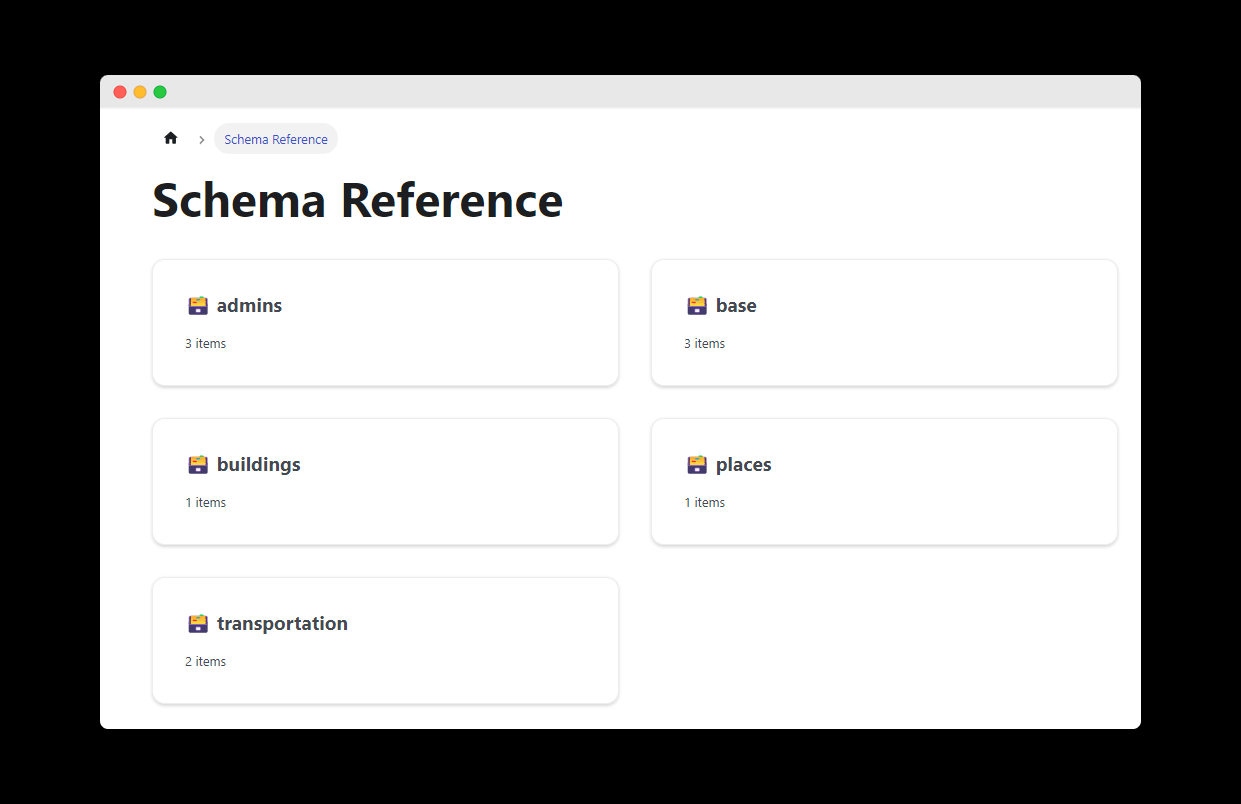

To select the needed columns, I had to check the schema reference for each data theme. It's available on the Overture Maps Foundation documentation here.

Next Steps

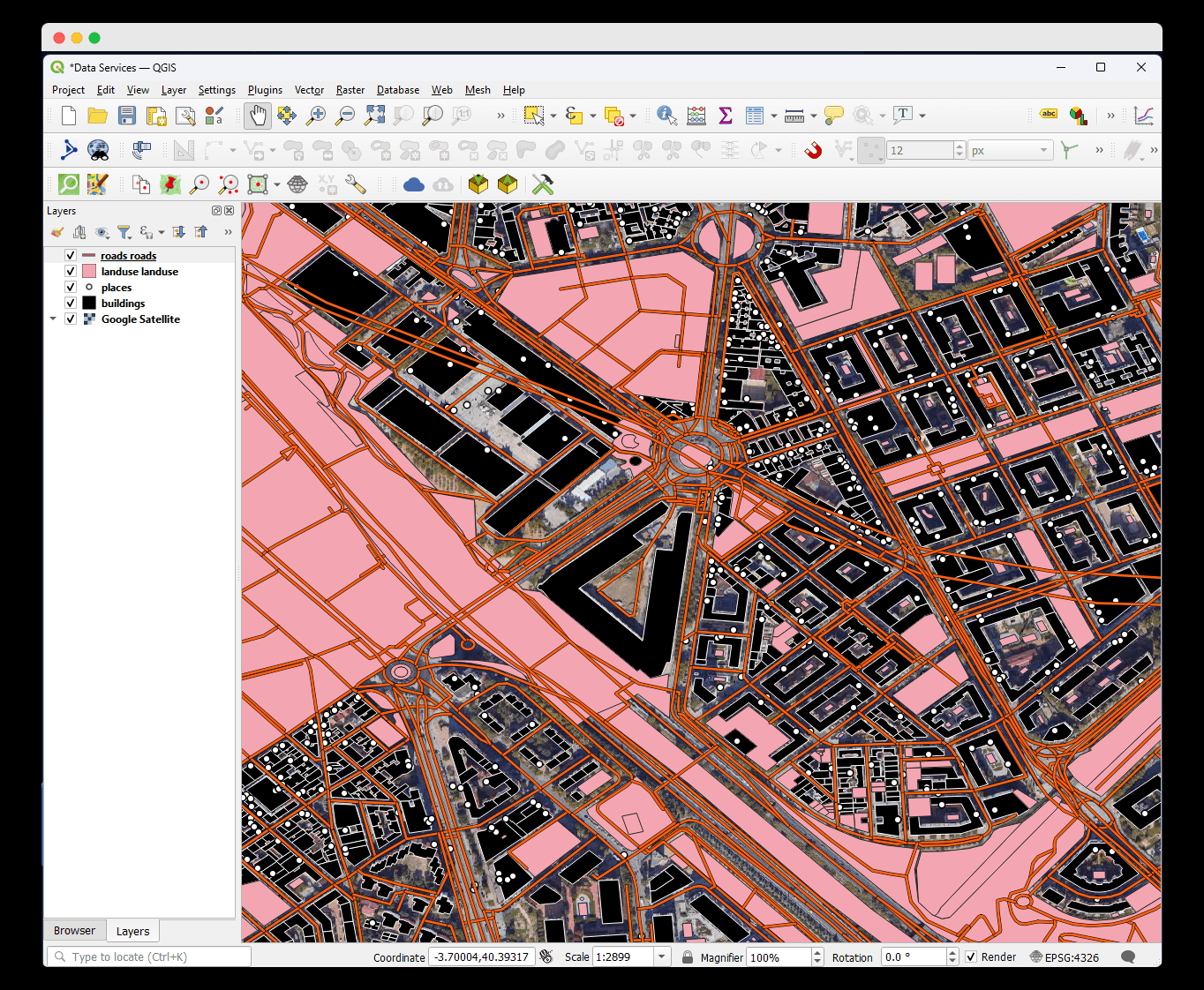

Unfortunately, as of the writing of this article, ArcGISPro can not open a FlatGeoBuf file. So I visualized the data in QGIS. You can explore the following options:

Instead of using FlatGeoBuf, you can directly use "Esri Shapefile" as the output format in the duckdb query.

You can also use fiona or any other libraries to convert the FlatGeoBuf file to Shapefile, or your format of choice.

You can loop in arcpy to load the data into your ArcGISPro project, to continue with your workflow.

The good thing is that, the possibilities are limitless, thanks to the interoperability of the tools in the geospatial community.

Conclusion

In this article, I discussed how to access the Overture Maps Foundation datasets using ArcGIS Pro Notebook. I also shared my personal experiences and how I overcame the challenges I encountered. I hope you found it helpful and that you learned something new.

This article didn't go into details of duckdb, and the Overture Maps Foundation, because there are already great resources on the internet that did justice to these topics. To learn more about Overture Maps Foundation, you can check out the official documentation, and you can find a good YouTube playlist by Dr. Quiseng Wu to learn more about duckdb here (exerpt below).

Thank you for reading.